Deep Learning at Edge

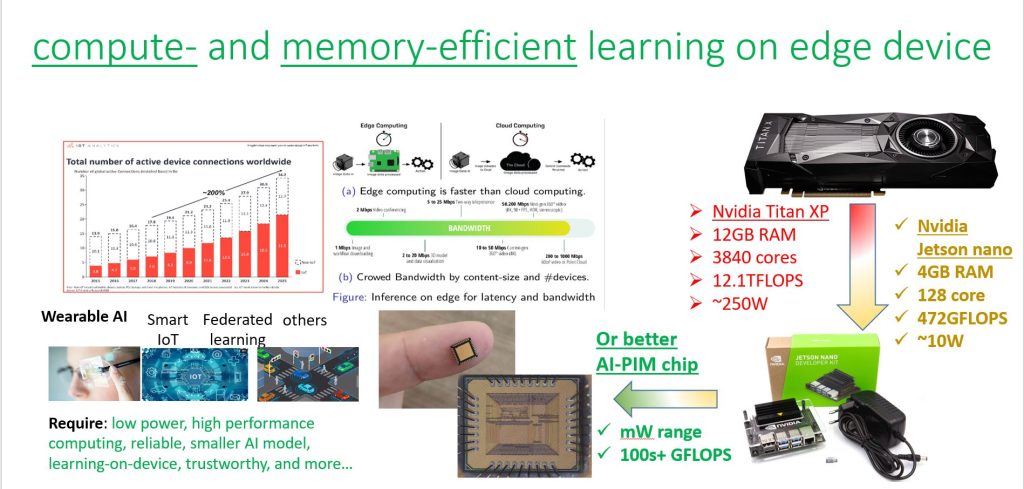

Deep neural networks (DNNs) are the state-of-the-art neural network computing model, achieving close-to or better-than-human performance in many large-scale cognitive applications such as computer vision, speech recognition, natural language processing, and object recognition. The most successful DNNs are deep convolutional neural networks, which consist of multiple types of layers, including convolution, activation, pooling, and fully-connected layers. A DNN may have tens to thousands of layers to reach the inference accuracy that practical applications require, which makes it both memory-intensive (tens of GB of working memory) and compute-intensive (requiring powerful CPUs, GPUs, FPGAs, or ASICs). Our research focuses on:

- Explore automated and general methodologies to simultaneously reduce DNN model size and computing complexity, while maintaining state-of-the-art accuracy

- Explore how to design and deploy hardware-efficient DNN models on low-power, resource-limited mobile systems, embedded systems, IoT devices, and edge devices for applications such as pattern recognition and object tracking/detection

- Explore memory- and computing-efficient on-device continual learning algorithms and system designs
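To make the first goal concrete, the sketch below shows one generic way to shrink a model: ternarizing weights so each one is stored as a 2-bit code in {-1, 0, +1} with a single shared scale per layer. It is an illustration only and is not taken from any of the publications listed on this page. The function names, the sample weights, and the `delta_factor=0.7` threshold (a heuristic often seen in the ternary-weight literature) are all assumptions.

```python
def ternarize(weights, delta_factor=0.7):
    """Quantize floats to codes in {-1, 0, +1} plus one shared scale alpha.

    delta_factor is an assumed heuristic, not a value from this page.
    """
    if not weights:
        return [], 0.0
    mean_abs = sum(abs(w) for w in weights) / len(weights)
    threshold = delta_factor * mean_abs  # smaller magnitudes are zeroed out
    kept = [abs(w) for w in weights if abs(w) > threshold]
    alpha = sum(kept) / len(kept) if kept else 0.0  # scale of nonzero codes
    codes = [1 if w > threshold else -1 if w < -threshold else 0
             for w in weights]
    return codes, alpha


def storage_bits(num_weights, bits_per_weight):
    """Raw weight storage, ignoring the per-layer scale and any metadata."""
    return num_weights * bits_per_weight


if __name__ == "__main__":
    layer = [0.9, -0.8, 0.05, -0.02, 0.4]  # hypothetical layer weights
    codes, alpha = ternarize(layer)
    print(codes, round(alpha, 3))
    # 32-bit floats versus 2-bit ternary codes
    print(storage_bits(len(layer), 32) / storage_bits(len(layer), 2))
```

Zeroed weights also let hardware skip the corresponding multiply-accumulate operations, which is why ternarization can reduce both model size and computation. How much accuracy survives depends on the training method, which this sketch does not address.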

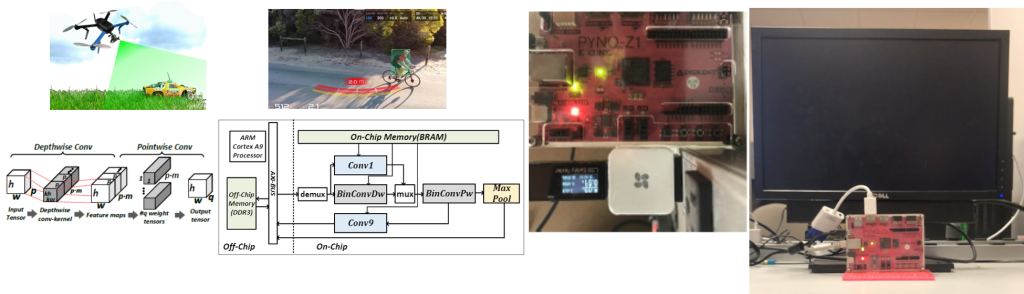

Real-time object tracking in an IoT FPGA based on the developed compressed deep neural network.

720p video, ~2 W power, ~11 FPS
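As a rough back-of-the-envelope reading of these figures, the sketch below derives energy per frame and pixel throughput. It assumes that 720p means 1280×720 pixels and treats the approximate power and frame-rate values as exact; the function names are illustrative.

```python
def energy_per_frame_joules(power_watts, frames_per_second):
    """Average energy spent per processed frame (W / FPS = J per frame)."""
    return power_watts / frames_per_second


def pixel_rate(width, height, frames_per_second):
    """Number of input pixels processed per second."""
    return width * height * frames_per_second


if __name__ == "__main__":
    # Assumed inputs: ~2 W, ~11 FPS, and 1280x720 for "720p".
    print(round(energy_per_frame_joules(2.0, 11.0), 3))  # about 0.182 J
    print(pixel_rate(1280, 720, 11))  # 10137600 pixels per second
```

Under those assumptions the system spends roughly 0.18 J per frame while processing about 10 million pixels per second.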

Related publications:

- [NeurIPS’23] Jian Meng, Li Yang, Kyungmin Lee, Jinwoo Shin, Deliang Fan, and Jae-sun Seo, “Slimmed Asymmetrical Contrastive Learning and Cross Distillation for Lightweight Model Training,” Thirty-Seventh Conference on Neural Information Processing Systems (NeurIPS), New Orleans, LA, Dec. 2023 [pdf]

- [NeurIPS’22] Li Yang*, Jian Meng*, Jae-sun Seo, and Deliang Fan, “Get More at Once: Alternating Sparse Training with Gradient Correction,” Thirty-sixth Conference on Neural Information Processing Systems (NeurIPS), New Orleans, LA, Nov. 29 – Dec. 1, 2022 (* The first two authors contributed equally) [pdf]

- [NeurIPS’22] Sen Lin, Li Yang, Deliang Fan, and Junshan Zhang, “Beyond Not-Forgetting: Continual Learning with Backward Knowledge Transfer,” Thirty-sixth Conference on Neural Information Processing Systems (NeurIPS), New Orleans, LA, Nov. 29 – Dec. 1, 2022 [pdf]

- [CVPR’22] Li Yang, Adnan Siraj Rakin, and Deliang Fan, “Rep-Net: Efficient On-Device Learning via Feature Reprogramming,” IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, Louisiana, June 19-24, 2022 [pdf]

- [CVPR’22] Jian Meng, Li Yang, Jinwoo Shin, Deliang Fan, and Jae-sun Seo, “Contrastive Dual Gating: Learning Sparse Features With Contrastive Learning,” IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, Louisiana, June 19-24, 2022 [pdf]

- [CVPR-ECV’22] Li Yang, Adnan Siraj Rakin, and Deliang Fan, “DA3: Dynamic Additive Attention Adaption for Memory-Efficient On-Device Learning,” Efficient Deep Learning for Computer Vision CVPR Workshop, New Orleans, Louisiana, June 19-24, 2022 [pdf]

- [ICLR’22] Sen Lin, Li Yang, Deliang Fan, and Junshan Zhang, “TRGP: Trust Region Gradient Projection for Continual Learning,” The Tenth International Conference on Learning Representations (ICLR), Apr. 25-29, 2022 [pdf] (spotlight)

- [CVPR’21] Li Yang, Zhezhi He, Junshan Zhang, and Deliang Fan, “KSM: Fast Multiple Task Adaption via Kernel-wise Soft Mask Learning,” IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 19-25, 2021 [pdf]

- [AAAI’20] Li Yang, Zhezhi He, and Deliang Fan, “Harmonious Coexistence of Structured Weight Pruning and Ternarization for Deep Neural Networks,” Thirty-Fourth AAAI Conference on Artificial Intelligence (AAAI), Feb. 7-12, 2020, New York, USA [pdf] (spotlight)

- [AAAI’22] Jingbo Sun, Li Yang, Jiaxin Zhang, Frank Liu, Mahantesh Halappanavar, Deliang Fan, and Yu Cao, “Gradient-based Novelty Detection Boosted by Self-supervised Binary Classification,” Thirty-Sixth AAAI Conference on Artificial Intelligence (AAAI), Feb. 22 – March 1, 2022, Vancouver, BC, Canada [Archived version]

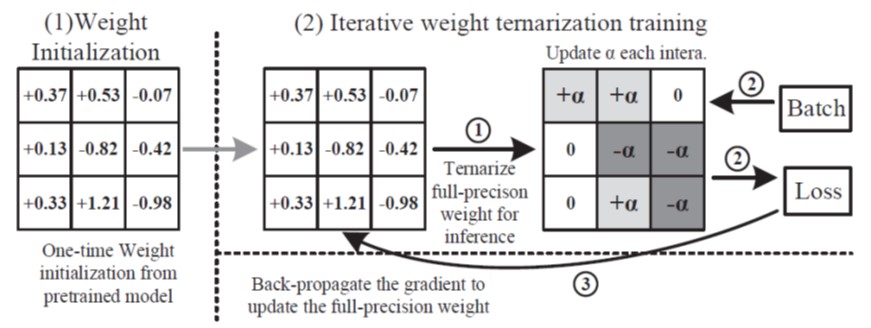

- [CVPR’19] Zhezhi He and Deliang Fan, “Simultaneously Optimizing Weight and Quantizer of Ternary Neural Network using Truncated Gaussian Approximation,” Conference on Computer Vision and Pattern Recognition (CVPR), June 16-20, 2019, Long Beach, CA, USA [pdf]

- [CVPR’19] Zhezhi He*, Adnan Siraj Rakin* and Deliang Fan, “Parametric Noise Injection: Trainable Randomness to Improve Deep Neural Network Robustness against Adversarial Attack,” Conference on Computer Vision and Pattern Recognition (CVPR), June 16-20, 2019, Long Beach, CA, USA (* The first two authors contributed equally) [pdf] [code in GitHub]

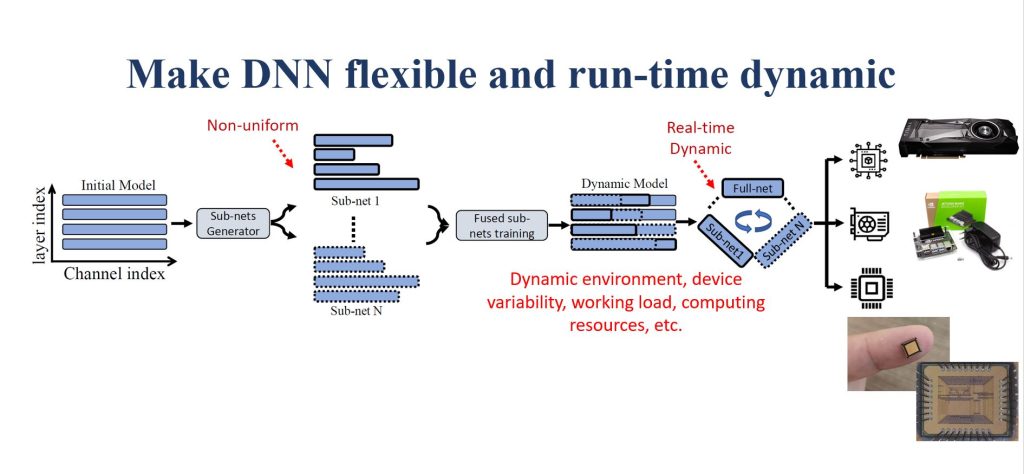

- [TNNLS’22] Li Yang, Zhezhi He, Yu Cao, and Deliang Fan, “A Progressive Sub-network Searching Framework for Dynamic Inference,” IEEE Transactions on Neural Networks and Learning Systems (TNNLS), 2022, DOI: 10.1109/TNNLS.2022.3199703 [pdf]

- [TPAMI’21] Adnan Siraj Rakin, Zhezhi He, Jingtao Li, Fan Yao, Chaitali Chakrabarti and Deliang Fan, “T-BFA: Targeted Bit-Flip Adversarial Weight Attack,” IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2021 [pdf]

- [TNNLS’21] Xiaolong Ma, Sheng Lin, Shaokai Ye, Zhezhi He, Linfeng Zhang, Geng Yuan, Sia Huat Tan, Zhenggang Li, Deliang Fan, Xuehai Qian, Xue Lin, Kaisheng Ma, and Yanzhi Wang, “Non-Structured DNN Weight Pruning – Is It Beneficial in Any Platform?,” IEEE Transactions on Neural Networks and Learning Systems (TNNLS), DOI: 10.1109/TNNLS.2021.3063265, 2021 [pdf]

- [USENIX Security’20] Fan Yao, Adnan Siraj Rakin and Deliang Fan, “DeepHammer: Depleting the Intelligence of Deep Neural Networks through Targeted Chain of Bit Flips,” In 29th USENIX Security Symposium (USENIX Security 20), August 12-14, 2020, Boston, MA, USA [pdf]

- [WACV’19] Zhezhi He, Boqing Gong, and Deliang Fan, “Optimize Deep Convolutional Neural Network with Ternarized Weights and High Accuracy,” IEEE Winter Conference on Applications of Computer Vision, January 7-11, 2019, Hawaii, USA [pdf] [code in GitHub]

- [ASPDAC’21] Li Yang and Deliang Fan, “Dynamic Neural Network to Enable Run-Time Trade-off between Accuracy and Latency,” 26th Asia and South Pacific Design Automation Conference (ASPDAC), Jan. 18-21, 2021 (invited) [pdf]

- [SOCC’20] Li Yang, Zhezhi He, Shaahin Angizi and Deliang Fan, “Processing-In-Memory Accelerator for Dynamic Neural Network with Run-Time Tuning of Accuracy, Power and Latency,” 33rd IEEE International System-on-Chip Conference (SOCC), September 8-11, 2020 (invited) [pdf]

- [DAC’20] Li Yang, Zhezhi He, Yu Cao, and Deliang Fan, “Non-uniform DNN Structured Subnets Sampling for Dynamic Inference,” 57th Design Automation Conference (DAC), San Francisco, CA, July 19-23, 2020 [pdf]

- [ASPDAC’20] Li Yang, Shaahin Angizi, Deliang Fan, “A Flexible Processing-in-Memory Accelerator for Dynamic Channel-Adaptive Deep Neural Networks,” Asia and South Pacific Design Automation Conference (ASP-DAC), Jan. 13-16, 2020, Beijing, China [pdf]

- [ISVLSI’19] Shaahin Angizi, Zhezhi He, Dayane Reis, Xiaobo Sharon Hu, Wilman Tsai, Shy Jay Lin and Deliang Fan, “Accelerating Deep Neural Networks in Processing-in-Memory Platforms: Analog or Digital Approach?,” IEEE Computer Society Annual Symposium on VLSI, 15 – 17 July 2019, Miami, Florida, USA (invited) [pdf]

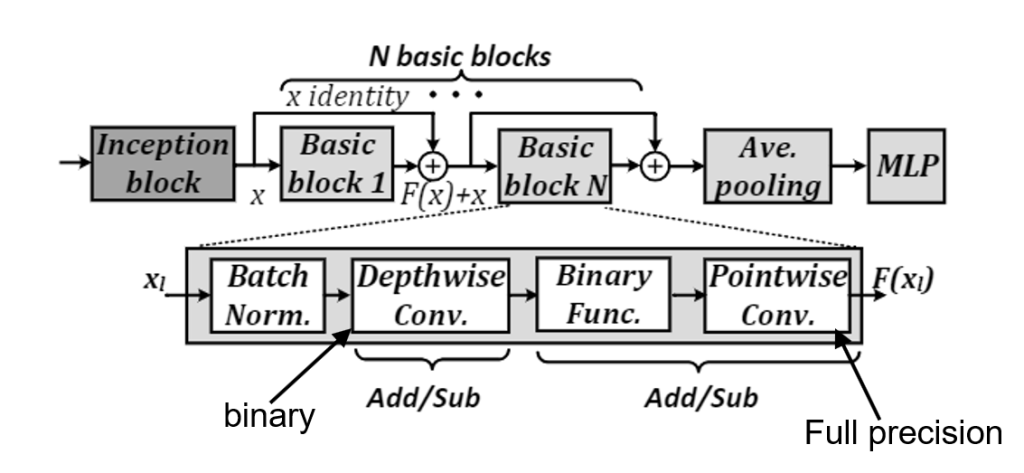

- [GLSVLSI’19] Li Yang, Zhezhi He, and Deliang Fan, “Binarized Depthwise Separable Neural Network for Object Tracking in FPGA,” ACM Great Lakes Symposium on VLSI (GLSVLSI), May 9-11, 2019, Washington, D.C., USA [pdf]

- [ICCD’18] Adnan Siraj Rakin, Shaahin Angizi, Zhezhi He, and Deliang Fan, “PIM-TGAN: A Processing-in-Memory Accelerator for Ternary Generative Adversarial Networks,” IEEE International Conference on Computer Design (ICCD), Oct. 7-10, 2018, Orlando, FL, USA [pdf]

- [ICCAD’18] Shaahin Angizi, Zhezhi He and Deliang Fan, “DIMA: A Depthwise CNN In-Memory Accelerator,” IEEE/ACM International Conference on Computer Aided Design, Nov. 5-8, 2018, San Diego, CA, USA [pdf]

- [ISLPED’18] Li Yang, Zhezhi He and Deliang Fan, “A Fully Onchip Binarized Convolutional Neural Network FPGA Implementation with Accurate Inference,” ACM/IEEE International Symposium on Low Power Electronics and Design, July 23-25, 2018, Bellevue, Washington, USA [pdf]

- [ISVLSI’18] Zhezhi He, Shaahin Angizi, Adnan Siraj Rakin, and Deliang Fan, “BD-NET: A Multiplication-less DNN with Binarized Depthwise Separable Convolution,” IEEE Computer Society Annual Symposium on VLSI, July 9-11, 2018, Hong Kong, China [pdf] (Best Paper Award)

- [DAC’18] Shaahin Angizi*, Zhezhi He*, Adnan Siraj Rakin and Deliang Fan, “CMP-PIM: An Energy-Efficient Comparator-based Processing-In-Memory Neural Network Accelerator,” IEEE/ACM Design Automation Conference (DAC), June 24-28, 2018, San Francisco, CA, USA (* The first two authors contributed equally) [pdf]

- [WACV’18] Y. Ding, L. Wang, D. Fan, and B. Gong, “A Semi-Supervised Two-Stage Approach to Learning from Noisy Labels,” IEEE Winter Conference on Applications of Computer Vision, March 12-14, 2018, Stateline, NV, USA [pdf]

- [JETC’20] Zhezhi He, Li Yang, Shaahin Angizi, Adnan Siraj Rakin, and Deliang Fan, “Sparse BD-Net: A Multiplication-Less DNN with Sparse Binarized Depth-wise Separable Convolution,” ACM Journal on Emerging Technologies in Computing Systems, January 2020, Article No. 15, https://doi.org/10.1145/3369391 [pdf]