AI Security: Defending and Harnessing the Bit-Flip based Adversarial Weight Attack

This repository contains a PyTorch implementation of the paper “Defending and Harnessing the Bit-Flip based Adversarial Weight Attack,” published at CVPR 2020. The paper mainly discusses how to defend against memory bit-flip based adversarial weight attacks.

[CVPR’20] Zhezhi He, Adnan Siraj Rakin, Jingtao Li, Chaitali Chakrabarti and Deliang Fan, “Defending and Harnessing the Bit-Flip based Adversarial Weight Attack,” 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 16-18, 2020, Seattle, Washington, USA [pdf]

Code is released at: https://github.com/elliothe/BFA

Abstract:

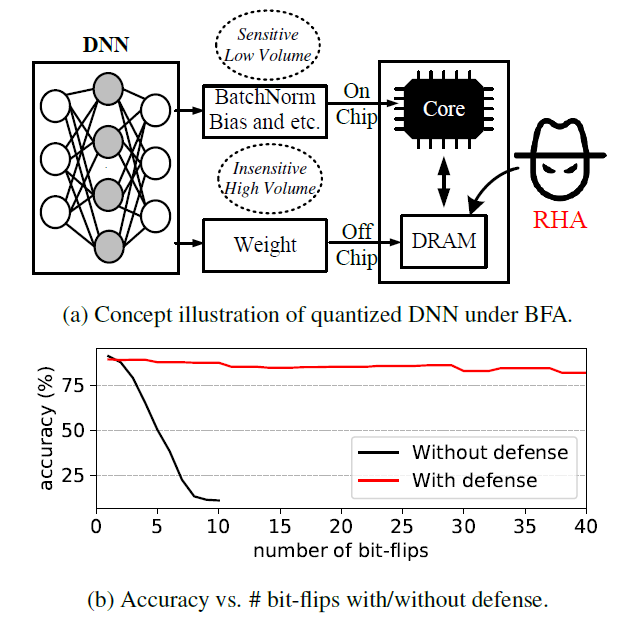

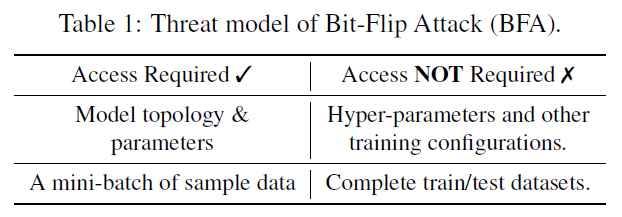

Recently, a new paradigm of adversarial attack on quantized neural network weights has attracted great attention, namely the Bit-Flip based adversarial weight attack, a.k.a. Bit-Flip Attack (BFA). BFA has shown extraordinary attacking ability: the adversary can make a quantized Deep Neural Network (DNN) malfunction, degrading it to the level of a random guess, through malicious bit-flips on a small set of vulnerable weight bits (e.g., 13 out of 93 million bits of 8-bit quantized ResNet-18). However, there are no effective defensive methods to enhance the fault-tolerance capability of DNNs against BFA. In this work, we conduct comprehensive investigations on BFA and propose to leverage binarization-aware training and its relaxation, piece-wise clustering, as simple and effective countermeasures to BFA. The experiments show that, for BFA to achieve the same prediction accuracy degradation (e.g., accuracy below 11% on CIFAR-10), it requires 19.3× and 480.1× more effective malicious bit-flips on ResNet-20 and VGG-11 respectively, compared to their defense-free counterparts.
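To give a feel for why a handful of bit-flips can be so damaging, the following minimal sketch flips a single bit of one 8-bit quantized weight. It assumes the weight is stored as a two's-complement integer, which is an illustrative assumption and not something stated in the abstract; `flip_bit` and `change_per_bit` are hypothetical helper names and are not part of the released code.

```python
def flip_bit(weight: int, bit: int) -> int:
    """Flip one bit of an 8-bit weight (assumed two's-complement storage)."""
    if not -128 <= weight <= 127:
        raise ValueError("weight must fit in a signed 8-bit integer")
    if not 0 <= bit <= 7:
        raise ValueError("bit index must be in 0..7")
    raw = weight & 0xFF                       # view the weight as an unsigned byte
    raw ^= 1 << bit                           # flip the selected bit
    return raw - 256 if raw >= 128 else raw   # convert back to a signed value


def change_per_bit(weight: int) -> dict:
    """Absolute change in the integer weight caused by flipping each bit."""
    return {bit: abs(flip_bit(weight, bit) - weight) for bit in range(8)}


if __name__ == "__main__":
    w = 3
    print("original weight:", w)
    print("after flipping bit 7:", flip_bit(w, 7))
    print("change per bit position:", change_per_bit(w))
```

Under this assumption, flipping bit `b` always changes the stored integer by exactly `2**b`, so a flip of the most significant bit moves a small weight to the far end of the quantization range. This only illustrates the effect of one flip; it does not implement the attack's search for vulnerable bits or the defenses proposed in the paper.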